AI systems can identify emotional states of patients

Psychiatry specialists often use less reliable, indirect methods such as skin conductance measurements

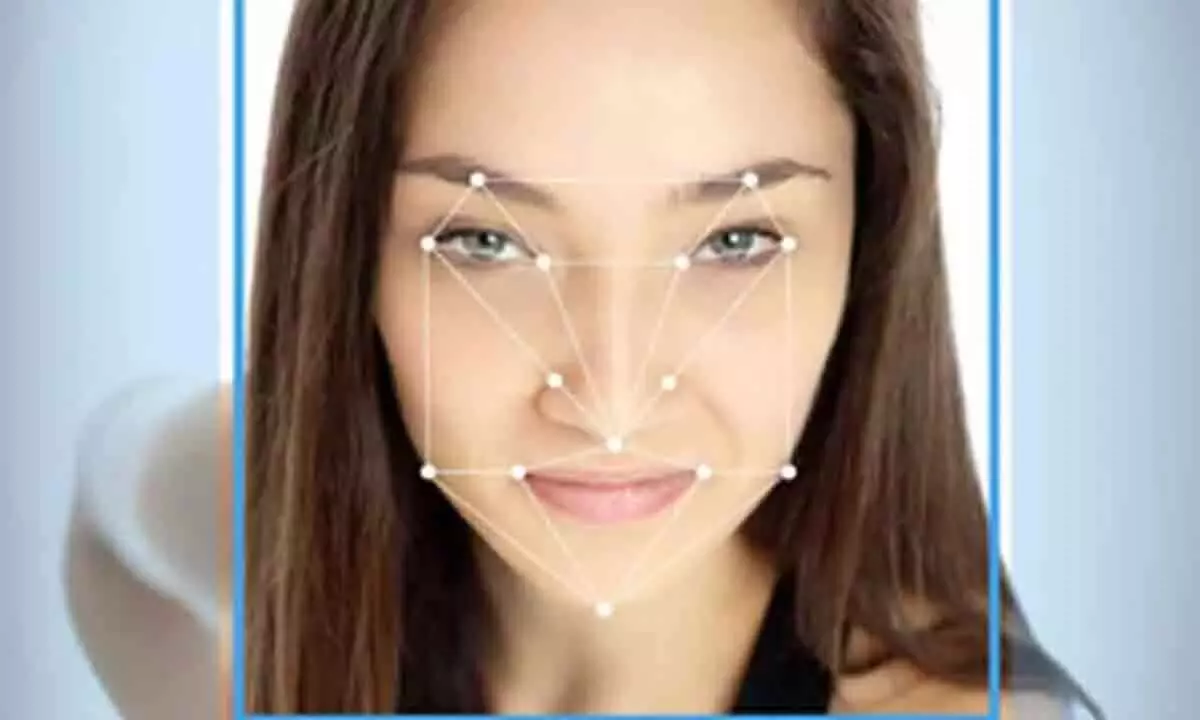

Image for illustrative purposes

London: AI systems can determine the emotional states of patients as accurately as a psychotherapist, according to research.

The face reflects a person's emotional state. The interpretation of facial expressions as part of psychotherapy or psychotherapeutic research, for example, is an effective way of characterising how a person is feeling in that particular moment.

But analysing and interpreting recorded facial expressions as part of research projects or psychotherapy is extremely time-consuming. Psychiatry specialists therefore often use less reliable, indirect methods such as skin conductance measurements, which can also serve as a measure of emotional arousal.

Researchers at the University of Basel in Switzerland said AI could become an important tool in therapy and research.

AI systems could be used in the analysis of existing video recordings from research studies in order to detect emotionally relevant moments in a conversation more easily and more directly. This ability could also help support the supervision of psychotherapists, they noted in the study published in the journal Psychopathology.

To test whether AI systems can reliably determine the emotional states of patients in video recordings, the researchers used freely available artificial neural networks that had been trained to detect six basic emotions (happiness, surprise, anger, disgust, sadness and fear) using more than 30,000 facial photos.

This AI system then analysed video data from therapy sessions with a total of 23 patients with borderline personality pathology. A high-performance computer had to process more than 950 hours of video recordings for the study.

The results were astonishing: statistical comparisons between the analyses of three trained therapists and those of the AI system showed a remarkable level of agreement, the researchers said.
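The report does not say which statistic the researchers used. As a rough illustration of how agreement between a human rater and an automated system can be quantified, here is a minimal sketch using Cohen's kappa, a common chance-corrected agreement measure. The function name and the example labels are hypothetical and were invented for this illustration; they are not data from the study.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(rater_a)

    # Share of items on which both raters chose the same label.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Agreement expected by chance, from each rater's label frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels for six video segments (illustration only, not study data).
therapist = ["happiness", "sadness", "sadness", "anger", "fear", "happiness"]
ai_system = ["happiness", "sadness", "anger", "anger", "fear", "happiness"]
kappa = cohens_kappa(therapist, ai_system)
```

A kappa of 1 means perfect agreement and a value near 0 means agreement no better than chance. With three therapists, as in the study, a pairwise comparison like this would need to be repeated per rater or replaced by a multi-rater statistic.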

The AI system assessed facial expressions as reliably as a human but was also able to detect even the most fleeting of emotions within the millisecond range, such as a brief smile or expression of disgust. Such micro expressions may be missed by therapists or perceived only subconsciously. The AI system is therefore able to measure fleeting emotions with greater sensitivity than trained therapists.

"We were really surprised to find that relatively simple AI systems can allocate facial expressions to their emotional states so reliably," said Dr. Martin Steppan, psychologist at the University’s Faculty of Psychology.
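The point about fleeting expressions can be illustrated with a small sketch: once a classifier has assigned a label to every video frame, short runs of a single emotion can be picked out by their duration. This is not the researchers' code. The function name, the "neutral" label, the 25 frames-per-second rate and the 0.5-second cut-off are all assumptions made for the example.

```python
from itertools import groupby


def brief_expressions(frame_labels, fps, max_duration_s=0.5, neutral="neutral"):
    """Return (label, start_s, duration_s) for each short run of one non-neutral label."""
    events = []
    index = 0  # frame index at which the current run starts
    for label, run in groupby(frame_labels):
        length = sum(1 for _ in run)
        duration = length / fps
        # Keep only emotional runs that are short enough to count as fleeting.
        if label != neutral and duration <= max_duration_s:
            events.append((label, index / fps, duration))
        index += length
    return events


# Hypothetical per-frame labels (illustration only): a 0.2-second smile
# followed later by two seconds of sadness.
frames = ["neutral"] * 10 + ["happiness"] * 5 + ["neutral"] * 10 + ["sadness"] * 50
events = brief_expressions(frames, fps=25)
```

Here only the short smile is reported, because the sustained sadness exceeds the assumed cut-off. A human observer watching in real time could plausibly miss a run of that length, which is the kind of sensitivity gap the article describes.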